The AI system DALL·E creates images from textual descriptions. The photorealistic results are impressive.

Laocoön was the first known security specialist: he recognized that the interior of the Trojan horse was jam-packed with Greek heroes. But the gods sent two sea serpents that dispatched the ancient security expert in no time, and that sealed Troy's fate.

The famous Laocoön group depicts the unfortunate whistleblower and his sons in the stranglehold of the serpents. What would a marble statue of an IT specialist look like if a Greek sculptor had modeled it on the Laocoön group? The AI system DALL·E 2 offers some possible answers.

Administrator in the style of the Laocoön group

Multimodal model

In January 2021, OpenAI attracted a great deal of attention beyond the machine learning community when it presented DALL·E and CLIP to the public. DALL·E was a milestone for multimodal approaches: the system, built from several neural networks, generated photorealistic images from simple text prompts. OpenAI playfully named it DALL·E, a portmanteau of the famous surrealist Salvador Dalí and the likeable robot from the Pixar film WALL-E.

To clear up the confusion about the numbers in the name: DALL·E (retrospectively also called DALL·E 1) was never publicly available. In April 2022, OpenAI presented a completely revised version with a new architecture as DALL·E 2. Since then, the company has tended to drop the 2; at the start of the beta, for example, it spoke only of DALL·E.

The beginnings

DALL·E 1 was not the first ML model to interpret text semantically and translate it into images. Earlier text-to-image systems existed, for example ones based on Generative Adversarial Networks (GANs), which a group around Ian Goodfellow introduced in a 2014 paper. A GAN consists of two artificial neural networks, a generator and a discriminator, that are trained against each other on large amounts of image data. The generator tries to create images that are visually as close as possible to the training dataset. The discriminator tries to unmask these results as fakes by telling them apart from real training images. After many rounds of this contest, the generator produces images that the discriminator no longer recognizes as generated.
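The adversarial game described above can be illustrated with a deliberately tiny example. This is a sketch under strong simplifying assumptions, not how image GANs are built: the "images" are single numbers drawn from a normal distribution with mean 4, the generator is a hypothetical linear map `G(z) = a*z + b`, and the discriminator is a hypothetical logistic regression `D(x) = sigmoid(w*x + c)`. Real GANs use deep convolutional networks and automatic differentiation; here the gradients are written out by hand to show the two alternating updates.

```python
import math
import random


def sigmoid(s: float) -> float:
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_step(params: dict, real: list, noise: list, lr: float = 0.05) -> dict:
    """One round of the adversarial game on 1-D toy data (hypothetical setup).

    Generator G(z) = a*z + b; discriminator D(x) = sigmoid(w*x + c).
    """
    a, b, w, c = params["a"], params["b"], params["w"], params["c"]
    fake = [a * z + b for z in noise]

    # Discriminator step: minimise -log D(real) - log(1 - D(fake)).
    d_real = [sigmoid(w * x + c) for x in real]
    d_fake = [sigmoid(w * x + c) for x in fake]
    dw = (sum((d - 1.0) * x for d, x in zip(d_real, real)) / len(real)
          + sum(d * x for d, x in zip(d_fake, fake)) / len(fake))
    dc = (sum(d - 1.0 for d in d_real) / len(real)
          + sum(d_fake) / len(fake))
    w -= lr * dw
    c -= lr * dc

    # Generator step: minimise -log D(G(z)) against the updated discriminator,
    # i.e. try to make the discriminator call the fakes "real".
    d_gen = [sigmoid(w * (a * z + b) + c) for z in noise]
    da = sum((d - 1.0) * w * z for d, z in zip(d_gen, noise)) / len(noise)
    db = sum((d - 1.0) * w for d in d_gen) / len(noise)
    a -= lr * da
    b -= lr * db
    return {"a": a, "b": b, "w": w, "c": c}


def train(steps: int = 500, seed: int = 0) -> dict:
    """Alternate both players; real samples come from N(4, 0.5)."""
    rng = random.Random(seed)
    params = {"a": 1.0, "b": 0.0, "w": 0.0, "c": 0.0}
    for _ in range(steps):
        real = [rng.gauss(4.0, 0.5) for _ in range(64)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(64)]
        params = train_step(params, real, noise)
    return params
```

After the first step, the discriminator learns to favor larger values (the real data lies to the right of the fakes), and the generator responds by shifting `b` toward the real data. Over many steps such a simple game may oscillate around the target rather than settle, one small glimpse of why GAN training is known to be delicate.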

In 2016, two years after the GAN paper, researchers from the University of Michigan and the Max Planck Institute for Informatics in Saarbrücken published the paper “Generative Adversarial Text to Image Synthesis”. The idea was to translate the visual concepts described in a text into pixels. The mapping between text and image came from the training dataset, whose creators had labeled all of the images by hand. The results were still far from photorealistic. The method described in the paper has been implemented several times, including in Scott Ellison Reed’s Text to Image API web application. The system produced interesting images for simple prompts, but the results for complex prompts were unsatisfactory. This was probably partly because the training dataset consisted mainly of images of animals and objects.

Text to Image web application

Text to Image: The “bird” prompt produced something bird-like.

Five years later, DALL·E arrived as a completely new approach. Instead of GANs, OpenAI used its GPT-3 transformer. Transformer models, developed by Google and OpenAI among others, rely on a self-attention mechanism: they weigh the relevance of each part of the input (the prompt) and of the output they have already generated. This is what makes the generated content coherent. OpenAI's gigantic NLP model GPT-3 (Generative Pre-trained Transformer 3) writes logically structured texts with meaningful connections between ideas, not without a certain literary touch.
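The self-attention mechanism mentioned above can be sketched in a few lines. This is a minimal single-head version of scaled dot-product attention with a causal mask, the variant used by GPT-style models, where each position may only look at the prompt and at tokens generated before it. The toy embeddings and the projection matrices `Wq`, `Wk`, `Wv` are illustrative assumptions; GPT-3 learns these matrices and stacks many attention heads across dozens of layers.

```python
import math


def softmax(xs):
    """Softmax with max-subtraction for numerical stability."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(A, B):
    """Multiply an n x k matrix by a k x m matrix (lists of lists)."""
    return [[sum(A[i][t] * B[t][j] for t in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]


def causal_self_attention(X, Wq, Wk, Wv):
    """Single-head scaled dot-product self-attention with a causal mask.

    X holds one embedding vector per token. Position i attends only to
    positions 0..i. Returns the output vectors and the attention weights.
    """
    Q, K, V = matmul(X, Wq), matmul(X, Wk), matmul(X, Wv)
    d_k = len(K[0])
    outputs, weights = [], []
    for i in range(len(X)):
        # Similarity of token i's query with every visible key, scaled by sqrt(d_k).
        scores = [sum(q * k for q, k in zip(Q[i], K[j])) / math.sqrt(d_k)
                  for j in range(i + 1)]
        w = softmax(scores)
        weights.append(w)
        # Each output is a weighted average of the value vectors it may see.
        outputs.append([sum(w[j] * V[j][col] for j in range(i + 1))
                        for col in range(len(V[0]))])
    return outputs, weights


X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # three toy token embeddings
I = [[1.0, 0.0], [0.0, 1.0]]               # identity projections for clarity
out, attn = causal_self_attention(X, I, I, I)
```

The first token can only attend to itself, so its attention weights are `[1.0]`; the third token gives the most weight to itself, because its query matches its own key best.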

GPT-3 can understand a wide variety of content, link it together, and draw coherent conclusions. One example shows that the system, first, knows Goethe’s writing style; second, knows what a TV commercial is; and third, can organically combine these two very different concepts.

The first version of DALL·E used a 12-billion-parameter version of GPT-3. During training, it learned to generate images from prompts, based on a dataset of image-text pairs. CLIP, another neural network from OpenAI, was used to match images to text and to rank the best visual results. Unlike DALL·E, CLIP is available as open-source software and has found many followers among AI artists.
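Ranking images against text, as CLIP does for DALL·E's candidates, comes down to comparing vectors. CLIP maps images and text into a shared embedding space with two encoders trained so that matching pairs end up close together. The sketch below assumes those embeddings have already been computed: the three-dimensional vectors and the image names are made up for illustration (real CLIP embeddings have hundreds of dimensions and come from the trained encoders), and the helper `rerank` is hypothetical, not part of CLIP's API.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two (non-zero) embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def rerank(text_embedding, image_embeddings, top_k=2):
    """Return the names of the top_k images whose embeddings best match the text.

    image_embeddings maps a (hypothetical) image name to its embedding vector.
    """
    ranked = sorted(
        image_embeddings,
        key=lambda name: cosine_similarity(text_embedding, image_embeddings[name]),
        reverse=True,
    )
    return ranked[:top_k]


text = [1.0, 0.0, 0.0]
images = {"a": [0.9, 0.1, 0.0], "b": [0.0, 1.0, 0.0], "c": [0.5, 0.5, 0.0]}
print(rerank(text, images))  # ['a', 'c']
```

Cosine similarity ignores vector length and compares only direction, which is why it is a common choice for comparing embeddings from different encoders.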

DALL·E’s capabilities were already convincing in 2021: the model understood the text input and generated matching images.

DALL·E 1 was the first step in OpenAI’s research project and was never released to the public. Only a small group of researchers and community ambassadors, including the author of this article, had access to the system. DALL·E’s knowledge and skills were surprising from the start.

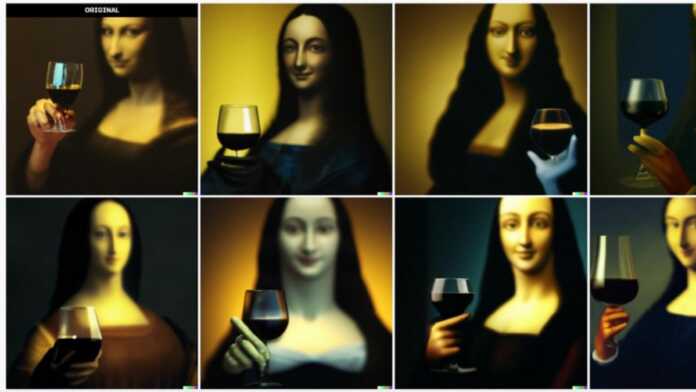

The image of the Mona Lisa drinking wine with da Vinci showed the system’s narrative power: the elegantly raised wine glass, with the Gioconda reflected in it, can certainly be read as a reference to the theories that the Mona Lisa is a self-portrait of da Vinci.

The author has created additional images with DALL·E 1.

Prompt: “Statue of Keanu Reeves in style of Michelangelo”

DALL·E 1 had some limitations:

- the resolution was low: 256×256 pixels,

- it was trained only on English-language text, and unclear input simply produced images of nature, and

- the image quality was not yet the best.

In April 2022, OpenAI presented the new version, DALL·E 2, which was significantly more powerful.