To mark Global Accessibility Awareness Day, the world accessibility day held on May 19, Apple previewed a set of accessibility features that will arrive in the near future, "by the end of the year", on iPhone, iPad, Apple Watch and Mac. It is an example, Apple says, of how hardware, software and machine learning can work together to help different groups of users in everyday life: the visually impaired, with Door Detection; those who live with physical and motor disabilities, with Apple Watch Mirroring combined with Voice Control and Switch Control; and the hearing impaired, who will be able to use Live Captions. VoiceOver also gains 20 new languages.

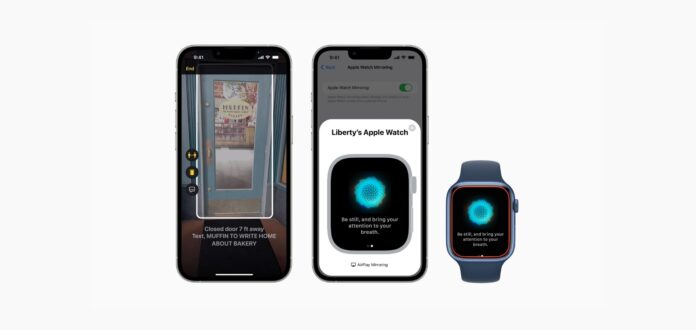

Door Detection is a new feature that will help blind and visually impaired users locate a door. But that is not all: it will also report how far away the door is, read any signs or labels on it, and say whether it is open or closed. If the door is closed, it will explain how to open it: whether it simply needs to be pushed or pulled or, if there is a handle, whether to turn it clockwise or counterclockwise.

In short, Door Detection promises to X-ray every entrance thanks to the LiDAR sensor of the iPhones and iPads that have one, namely iPhone 12 Pro and Pro Max, iPhone 13 Pro and Pro Max, iPad Pro 11" (second and third generation) and iPad Pro 12.9" (fourth and fifth generation).

Apple Watch is also taking a step forward in accessibility. Thanks to Mirroring, the Cupertino smartwatch can be controlled through the iPhone's Voice Control and Switch Control features instead of having to touch the display with a fingertip. In addition, new quick actions will let users carry out with simple gestures tasks that currently require specific taps, such as answering a call or pausing a workout.

For the hard of hearing, Apple has announced Live Captions, real-time subtitles along the lines of those Google introduced on the Pixel some time ago. The feature is as simple as it is useful for those who need it: any content that includes audio will be transcribed live on screen.

On Mac there will also be the option to type a response, for example during a call, and have it read aloud to the other party. As with Google's similar feature, the content will be processed on the device, so there is no need to fear for privacy. VoiceOver, as mentioned at the beginning, will get 20 additional languages, including Bengali, Bulgarian, Catalan, Ukrainian and Vietnamese.

"We are happy to present these innovations, an expression of the creative and innovative spirit of Apple's teams, which work to offer users more opportunities to use our products in a way that better suits each person's needs and daily life," said Sarah Herrlinger, Apple's Senior Director of Accessibility Policy and Initiatives.

Apple has also previewed other, smaller accessibility features; you can find them through the link in SOURCE.