Artificial intelligence (AI) continues to evolve and expand its capabilities in the field of imaging. Stability AI has announced the beta version of its latest model, Stable Diffusion XL (SDXL), which promises to take hyperrealism to a new level for business use.

Stable Diffusion XL: A qualitative leap

The SDXL model has 2.3 billion parameters, making it considerably more powerful than its predecessors. Its improvements include more advanced face generation, better image composition, and the ability to render legible text.

SDXL not only generates images from text but also adds new features such as image-to-image prompting, that is, producing variations of an existing image. It also supports inpainting and outpainting, which respectively reconstruct missing parts of an image and extend an image coherently beyond its original borders.
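Independently of any particular product, inpainting and outpainting are commonly framed the same way: the model receives an image plus a binary mask marking which pixels it should generate. The sketch below is a generic illustration of that idea under stated assumptions, not Stability AI's or DreamStudio's API; the function names `inpaint_mask` and `outpaint_inputs` and the list-of-lists grayscale image format are hypothetical.

```python
from typing import List, Tuple

# Hypothetical representation: a grayscale image as rows of integer pixels.
Image = List[List[int]]


def inpaint_mask(height: int, width: int,
                 top: int, left: int, bottom: int, right: int) -> Image:
    """Mask for inpainting: 1 marks the missing rectangle to reconstruct,
    0 marks pixels to keep. Bounds are half-open: [top, bottom) x [left, right)."""
    return [[1 if top <= y < bottom and left <= x < right else 0
             for x in range(width)]
            for y in range(height)]


def outpaint_inputs(image: Image, pad: int, fill: int = 0) -> Tuple[Image, Image]:
    """Outpainting framed as inpainting on a larger canvas.

    The original image is placed in the centre of a canvas enlarged by `pad`
    pixels on every side. The new border is filled with `fill` and marked
    with 1 in the mask (to be generated); original pixels are marked 0 (kept).
    """
    height = len(image)
    width = len(image[0]) if height else 0
    new_h, new_w = height + 2 * pad, width + 2 * pad
    canvas = [[fill] * new_w for _ in range(new_h)]
    mask = [[1] * new_w for _ in range(new_h)]
    for y in range(height):
        for x in range(width):
            canvas[y + pad][x + pad] = image[y][x]
            mask[y + pad][x + pad] = 0
    return canvas, mask


if __name__ == "__main__":
    canvas, mask = outpaint_inputs([[5, 6], [7, 8]], pad=1)
    for row in mask:
        print(row)
```

Running the example prints a 4x4 mask with 0s over the original 2x2 image and 1s around it, i.e. the border a model would be asked to fill in. Inpainting uses the same kind of mask, with the 1s placed over a region inside the image instead of around it.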

The model carries the ‘XL’ label because it has 2.3 billion parameters, while previous models were in the 900 million parameter range. SDXL is an architectural enhancement of the 2.0 model; the 3.0 models are still in development.

List of improvements over previous versions of Stable Diffusion

Improvements in Stable Diffusion XL (SDXL) over previous versions of Stable Diffusion include:

- Increased number of parameters: SDXL has 2.3 billion parameters, compared to 900 million for previous models. This larger capacity lets the model learn more from its training data and perform better.

- Improved hyper-realism: The SDXL model offers a level of detail and quality in image generation that exceeds that of previous versions, which translates into more realistic images.

- Advanced face generation: SDXL has improved its ability to generate human faces, making them more realistic and consistent in terms of facial features and expressions.

- Improved image composition: The SDXL model is capable of creating images with more elaborate and coherent composition, resulting in more convincing and realistic scenes.

- Legible text rendering: Compared with previous models, SDXL is better at rendering human-readable text within images, which can be useful in applications such as banners or illustrations that include text.

- Image-to-image prompting functionality: SDXL goes beyond the traditional text-to-image approach and includes the ability to generate variations of one image from another image.

- Inpainting and outpainting: The SDXL model offers the possibility of reconstructing missing parts of an image (inpainting) and coherently extending an existing image (outpainting).

These improvements make SDXL a more powerful and versatile model compared to previous versions of Stable Diffusion, expanding its possible applications in various industries and creative fields.

Is it comparable to Midjourney?

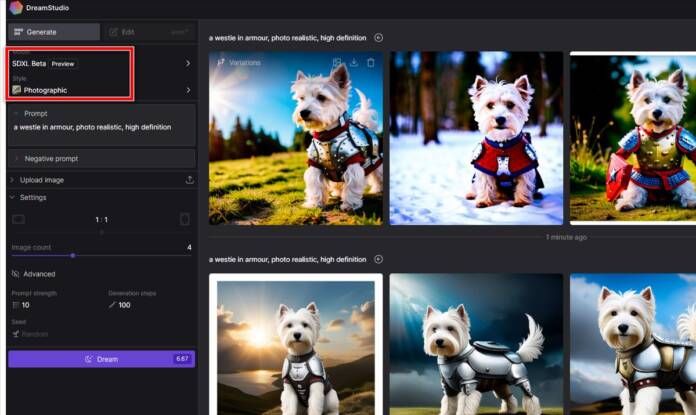

Based on early tests, not yet. With the prompt "a westie in armour, photo realistic, high definition", the new SDXL model generates the following in photo mode:

Midjourney, meanwhile, continues to deliver impressive results:

You can try SDXL at beta.dreamstudio.ai.

In search of a balance between creativity and ethics

Stability AI has faced challenges in the field of intellectual property, with artists opposing the use of their works as training data for Stable Diffusion models. The company has collaborated with the Spawning organization to honor artists' requests to exclude their work from the training of future models.

The company has also been the subject of copyright infringement lawsuits related to AI-generated art, including one from the image agency Getty Images, which sued over the alleged misuse of its images.