Functionalities of Sensors:

- Perception and Sensing:

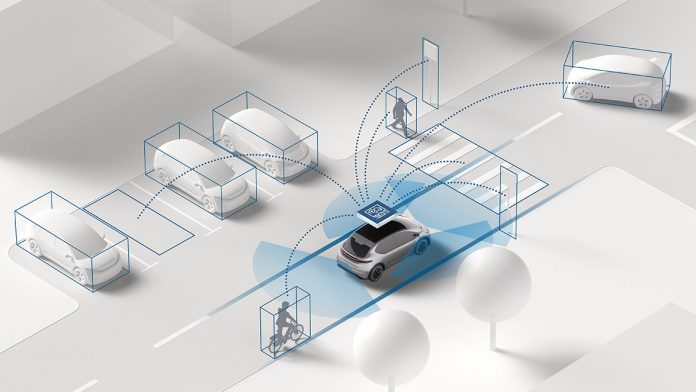

Sensors let robots perceive the world around them, supplying information about the environment, objects, and events. Vision sensors capture visual data, proximity sensors detect obstacles, and force sensors measure contact forces. By integrating multiple sensors, robots can build comprehensive situational awareness and make informed decisions in real time.

Perception and Sensing in Robotics: Enabling Intelligent Interaction with the Environment

Perception and sensing are foundational to robotics because they let a robot interact intelligently with its environment. By gathering and interpreting data from various sensors, robots can perceive their surroundings, make informed decisions, and adapt to dynamic conditions. From detecting obstacles to recognizing objects and navigating complex environments, perception and sensing underpin the autonomy, safety, and efficiency of robotic systems. The sections below cover their principles, functionalities, and impact on robotic capabilities.

Principles of Perception and Sensing:

Perception and sensing in robotics encompass a wide range of sensory modalities and techniques, each serving specific purposes in gathering information about the environment. These principles include:

- Sensor Modalities:

Robots utilize diverse sensor modalities, including vision, LiDAR (Light Detection and Ranging), radar, sonar, inertial measurement units (IMUs), GPS (Global Positioning System), and more. Each sensor modality provides unique capabilities for detecting and measuring different aspects of the environment, such as visual features, distances, velocities, and orientations.

Sensor Modalities in Robotics: Exploring the Spectrum of Perception

Sensor modalities serve as the eyes and ears of robots, enabling them to perceive, interpret, and interact with their environment. From capturing visual information to detecting sound waves and measuring physical forces, they span a diverse array of technologies, each giving the robot a different view of the world around it. Understanding the principles, functionalities, and applications of each modality is essential for choosing and combining sensors well. The sections below survey the main sensor modalities in robotics, their roles, and their capabilities.

Principles of Sensor Modalities:

Sensor modalities encompass a wide range of technologies that detect and measure different aspects of the environment. Each modality operates based on specific principles and physical phenomena, allowing robots to gather information from various sensory channels. Some fundamental principles of sensor modalities include:

Electromagnetic Sensing:

Electromagnetic sensors, such as cameras, LiDAR (Light Detection and Ranging), and radar, use electromagnetic waves to detect objects, measure distances, and capture spatial information. Cameras are passive and record light that the scene already reflects or emits, while LiDAR and radar are active: they emit their own signal and measure its return. These sensors rely on principles such as light reflection, wave propagation (including time of flight), and the Doppler effect to perceive the environment.
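The Doppler principle mentioned above can be made concrete with a short calculation. The following is a minimal sketch, assuming a monostatic continuous-wave radar (transmitter and receiver in the same place); the function name `radial_velocity` and the 24 GHz carrier in the usage lines are illustrative assumptions, not part of any particular radar API.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def radial_velocity(doppler_shift_hz: float, carrier_hz: float) -> float:
    """Radial speed of a target from the measured two-way Doppler shift.

    The wave travels to the target and back, so the shift is
    f_d = 2 * v * f_0 / c, which rearranges to v = f_d * c / (2 * f_0).
    A positive shift means the target is approaching.
    """
    if carrier_hz <= 0:
        raise ValueError("carrier frequency must be positive")
    return doppler_shift_hz * SPEED_OF_LIGHT / (2.0 * carrier_hz)


if __name__ == "__main__":
    # Hypothetical reading: a 160 Hz shift on a 24 GHz carrier.
    speed = radial_velocity(doppler_shift_hz=160.0, carrier_hz=24e9)
    print(f"radial speed: {speed:.3f} m/s")
```

Only the velocity component along the line of sight is recovered this way; motion perpendicular to the beam produces no Doppler shift.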

Acoustic Sensing:

Acoustic sensors, including microphones and ultrasonic transducers, detect sound waves and vibrations to analyze auditory signals, localize sound sources, and detect objects based on echolocation principles. Acoustic sensing is used in applications such as speech recognition, environmental monitoring, and object detection in underwater or low-visibility environments.
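Echolocation with an ultrasonic transducer reduces to a time-of-flight calculation. The sketch below assumes the sensor reports the round-trip echo time in seconds and that the medium is air; the function name `echo_distance_m` is hypothetical, and the speed of sound uses the common linear approximation in temperature.

```python
def echo_distance_m(round_trip_s: float, temperature_c: float = 20.0) -> float:
    """Distance to a reflecting object from an ultrasonic echo time.

    The pulse travels to the object and back, so the one-way distance is
    half of (speed of sound * round-trip time).
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # Approximate speed of sound in dry air (m/s) as a function of temperature.
    speed_of_sound = 331.3 + 0.606 * temperature_c
    return speed_of_sound * round_trip_s / 2.0


if __name__ == "__main__":
    # Hypothetical reading: echo received 10 ms after the pulse was sent.
    print(f"{echo_distance_m(0.010):.3f} m")
```

Underwater sonar follows the same formula with a much higher propagation speed, so the speed term would need to be replaced for that setting.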

Mechanical Sensing:

Mechanical sensors measure physical forces, pressures, strains, and deformations using effects such as piezoelectricity, resistance change under strain (the basis of strain gauges), and capacitive sensing. These sensors are used for detecting touch, pressure, motion, and structural integrity in robotic systems, industrial automation, and human-robot interaction.

Chemical Sensing:

Chemical sensors detect and measure specific chemicals, gases, or other substances in the environment. Compact sensors typically rely on chemiresistive or electrochemical principles, while gas chromatography and mass spectrometry offer higher selectivity at the cost of bulkier instruments. These sensors are employed in applications such as environmental monitoring, industrial safety, and gas detection in hazardous environments.

Biological Sensing:

Biological sensors measure biological signals, physiological parameters, and biomarkers to monitor health, behavior, and biological processes. Examples include biosensors that detect biomolecules, bioelectrodes that measure electrical signals, and biopotential sensors that monitor vital signs.

Functionalities of Sensor Modalities in Robotics:

Sensor modalities provide robots with critical functionalities that enable them to perceive, interpret, and interact with the environment effectively:

Perception and Environment Understanding:

Sensor modalities enable robots to perceive the environment by capturing sensory data from multiple channels, including visual, auditory, tactile, and environmental cues. By integrating data from different sensors, robots can build comprehensive representations of the environment, detect objects, navigate obstacles, and localize themselves accurately.

Object Detection and Recognition:

Vision sensors, LiDAR, and radar enable robots to detect and recognize objects in the environment, such as people, vehicles, obstacles, and landmarks. Object detection and recognition facilitate various tasks, including navigation, manipulation, grasping, and interaction in industrial, service, and domestic settings.

Navigation and Localization:

Sensor modalities, such as GPS, IMUs (Inertial Measurement Units), and LiDAR, provide robots with localization and navigation capabilities, allowing them to determine their position, orientation, and trajectory relative to the environment. Navigation and localization are essential for autonomous robots operating in indoor, outdoor, and dynamic environments.
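One way to see how inertial and odometry data yield position and orientation is dead reckoning, which integrates speed and turn rate over time. The sketch below is a simplified planar example under stated assumptions: forward speed `v` and yaw rate `omega` are taken as already measured (for instance from wheel odometry and a gyroscope), and the names `Pose2D` and `integrate` are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x: float      # metres
    y: float      # metres
    theta: float  # heading in radians


def integrate(pose: Pose2D, v: float, omega: float, dt: float) -> Pose2D:
    """Advance a planar pose by one time step using midpoint integration."""
    # Use the heading halfway through the step to reduce integration error.
    theta_mid = pose.theta + 0.5 * omega * dt
    new_theta = pose.theta + omega * dt
    # Wrap the heading into (-pi, pi] so it stays bounded.
    new_theta = math.atan2(math.sin(new_theta), math.cos(new_theta))
    return Pose2D(
        x=pose.x + v * math.cos(theta_mid) * dt,
        y=pose.y + v * math.sin(theta_mid) * dt,
        theta=new_theta,
    )


if __name__ == "__main__":
    pose = Pose2D(0.0, 0.0, 0.0)
    for _ in range(10):  # drive a gentle left arc for one second
        pose = integrate(pose, v=1.0, omega=0.2, dt=0.1)
    print(pose)
```

Because every step adds a little measurement error, a dead-reckoned pose drifts over time, which is why absolute references such as GPS fixes or LiDAR map matching are combined with it in practice.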

Human-Robot Interaction:

Sensor modalities enable robots to interact intelligently with humans by perceiving human gestures, expressions, and intentions. Vision sensors, depth cameras, and microphones allow robots to recognize human faces, gestures, and speech, facilitating natural and intuitive communication in social robotics, healthcare, and service applications.

Environmental Monitoring and Surveillance:

Sensor modalities such as cameras, environmental sensors, and acoustic arrays enable robots to monitor their surroundings for purposes such as security and environmental monitoring. By continuously scanning the environment and analyzing sensor data, robots can detect anomalies, identify safety hazards, and provide real-time situational awareness in critical scenarios.

Advancements and Future Directions:

Recent advancements in sensor modalities have focused on improving sensor performance and easing integration with robotic systems. Emerging trends include the development of lightweight and low-power sensors, high-resolution imaging systems, intelligent sensor fusion techniques, and advanced machine learning algorithms for perception and decision-making.

As robotics technology continues to advance, the integration of diverse sensor modalities will play a crucial role in enabling more autonomous, adaptive, and intelligent robotic systems. From autonomous vehicles and drones to industrial and service robots, sensor modalities will continue to drive innovation and expand what robots can do. By combining them well, robots can navigate complex environments, interact seamlessly with humans, and carry out a wide range of tasks with precision and efficiency.

- Data Fusion:

To obtain a comprehensive understanding of the environment, robots often employ sensor fusion techniques that integrate data from multiple sensors. Sensor fusion enables robots to compensate for individual sensor limitations, improve accuracy, and enhance overall perception capabilities. Common fusion techniques include sensor-level fusion, feature-level fusion, and decision-level fusion.
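A small numerical example shows how fusion can improve accuracy beyond any single sensor. The sketch below uses inverse-variance weighting, one simple technique for combining independent measurements of the same quantity; it is offered as an illustration rather than as the method any particular robot uses, and the function name `fuse` and the sample readings are assumptions.

```python
from typing import List, Tuple


def fuse(measurements: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Fuse independent (value, variance) measurements of one quantity.

    Each measurement is weighted by the inverse of its variance, so more
    precise sensors count for more. Returns (fused value, fused variance).
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    if any(var <= 0 for _, var in measurements):
        raise ValueError("variances must be positive")
    weights = [1.0 / var for _, var in measurements]
    total_weight = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, measurements)) / total_weight
    return value, 1.0 / total_weight


if __name__ == "__main__":
    # Hypothetical range readings (metres, variance in m^2):
    sonar = (2.0, 0.04)   # noisier sensor
    lidar = (2.2, 0.01)   # more precise sensor
    print(fuse([sonar, lidar]))
```

The fused variance is always smaller than the smallest input variance, which is the quantitative sense in which fusion compensates for individual sensor limitations.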

Data Fusion in Robotics: Enhancing Perception, Decision-Making, and Autonomy

Robots need to interact reliably with their environment and make accurate decisions, and data fusion is a critical part of achieving both. Data fusion techniques enable robots to integrate information from multiple sensors, sources, and modalities to form a comprehensive understanding of the environment. By combining disparate data streams, robots can enhance perception, improve localization, and make informed decisions in dynamic and uncertain environments. The sections below cover the principles and functionalities of data fusion and its impact on robotic systems.

Principles of Data Fusion:

Data fusion involves the integration and analysis of data from multiple sensors, sources, or modalities to produce a unified representation of the environment. These principles guide the process of data fusion:

Sensor Fusion:

Sensor fusion techniques integrate data from different sensors, such as cameras, LiDAR, radar, and IMUs, to compensate for individual sensor limitations, improve accuracy, and enhance overall perception capabilities. Sensor fusion can be performed at various levels, including sensor-level fusion, feature-level fusion, and decision-level fusion.

Temporal Fusion:

Temporal fusion techniques combine data collected over time to track dynamic changes in the environment, such as object motion, trajectory prediction, and temporal relationships. Temporal fusion enables robots to maintain a consistent and up-to-date representation of the environment, even in dynamic or rapidly changing scenarios.
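Combining measurements over time can be sketched with an alpha-beta filter, a lightweight tracker that maintains position and velocity estimates from a stream of noisy positions. This is one possible illustration of temporal fusion, not a prescribed method; the function name `alpha_beta_track` and the gain values are assumptions chosen for the example.

```python
from typing import List, Tuple


def alpha_beta_track(
    measurements: List[float], dt: float, alpha: float = 0.5, beta: float = 0.1
) -> List[Tuple[float, float]]:
    """Track position and velocity from successive position measurements.

    Each step predicts the position with a constant-velocity model, then
    corrects position and velocity by a fraction of the prediction error.
    """
    if not measurements:
        return []
    x, v = measurements[0], 0.0  # start at the first reading, at rest
    estimates = []
    for z in measurements[1:]:
        x_pred = x + v * dt           # predict from the previous estimate
        residual = z - x_pred         # how far off the prediction was
        x = x_pred + alpha * residual
        v = v + (beta / dt) * residual
        estimates.append((x, v))
    return estimates


if __name__ == "__main__":
    # Hypothetical target moving at 1 m/s, sampled once per second.
    readings = [float(k) for k in range(20)]
    print(alpha_beta_track(readings, dt=1.0)[-1])
```

Larger gains follow new measurements more closely, while smaller gains smooth more heavily; the velocity estimate is what allows short-horizon trajectory prediction.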

Spatial Fusion:

Spatial fusion techniques integrate data collected from different spatial locations or viewpoints to create a comprehensive spatial representation of the environment. Spatial fusion enables robots to build accurate maps, localize themselves within those maps, and understand spatial relationships between objects and landmarks.
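At its core, merging viewpoints means expressing every observation in one shared coordinate frame. The sketch below shows this for 2D points, assuming each sensor's pose in the world frame is already known; the function names `to_world` and `merge_views` and the sample poses are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Pose = Tuple[float, float, float]  # (x, y, yaw) of the sensor in the world frame


def to_world(points: List[Point], sensor_pose: Pose) -> List[Point]:
    """Rotate and translate sensor-frame points into the world frame."""
    sx, sy, yaw = sensor_pose
    c, s = math.cos(yaw), math.sin(yaw)
    return [(sx + c * px - s * py, sy + s * px + c * py) for px, py in points]


def merge_views(views: List[Tuple[List[Point], Pose]]) -> List[Point]:
    """Combine point sets observed from several poses into one world-frame set."""
    merged: List[Point] = []
    for points, pose in views:
        merged.extend(to_world(points, pose))
    return merged


if __name__ == "__main__":
    front = ([(1.0, 0.0)], (0.0, 0.0, 0.0))          # sensor at origin, facing +x
    side = ([(1.0, 0.0)], (2.0, 3.0, math.pi / 2))   # sensor rotated 90 degrees
    print(merge_views([front, side]))
```

Real systems also have to estimate the sensor poses themselves and handle duplicate observations of the same surface, which this sketch deliberately leaves out.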

Multi-Modal Fusion:

Multi-modal fusion techniques combine data from multiple modalities, such as visual, auditory, and tactile sensors, to exploit complementary information and enhance perception capabilities. Multi-modal fusion enables robots to perceive the environment more robustly and accurately by leveraging diverse sensory channels.

Probabilistic Fusion:

Probabilistic fusion techniques use probabilistic models and inference algorithms to fuse uncertain and noisy sensor data, incorporating uncertainty measures into the fusion process. Probabilistic fusion enables robots to reason about uncertainty, make informed decisions, and maintain robustness in uncertain environments.
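A one-dimensional Kalman filter is a compact example of fusing noisy data while tracking uncertainty explicitly. The sketch below assumes a scalar state that is roughly constant between readings; the function name `kalman_1d`, the initial values, and the noise variances are illustrative assumptions.

```python
from typing import List, Tuple


def kalman_1d(
    measurements: List[float],
    meas_var: float,
    process_var: float,
    x0: float = 0.0,
    p0: float = 1.0,
) -> List[Tuple[float, float]]:
    """Estimate a slowly varying scalar from noisy readings.

    Returns the (estimate, variance) pair after each measurement.
    """
    x, p = x0, p0
    history = []
    for z in measurements:
        # Predict: the state is assumed unchanged, but uncertainty grows.
        p = p + process_var
        # Update: the gain balances prior uncertainty against sensor noise.
        gain = p / (p + meas_var)
        x = x + gain * (z - x)
        p = (1.0 - gain) * p
        history.append((x, p))
    return history


if __name__ == "__main__":
    # Hypothetical noisy distance readings around 5 m.
    readings = [5.2, 4.9, 5.1, 4.8, 5.0]
    for estimate, variance in kalman_1d(readings, meas_var=0.04, process_var=1e-4, p0=100.0):
        print(f"{estimate:.3f} (variance {variance:.4f})")
```

The variance returned alongside each estimate is what lets downstream logic reason about how much to trust the fused value.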

Functionalities of Data Fusion in Robotics:

Data fusion techniques provide robots with critical functionalities that enhance perception, decision-making, and autonomy:

Enhanced Perception:

Data fusion enables robots to create a comprehensive and accurate representation of the environment by integrating data from multiple sensors and modalities. By combining visual, auditory, and tactile information, robots can perceive objects, obstacles, and landmarks more reliably and robustly.

Improved Localization and Mapping:

Data fusion techniques improve localization and mapping capabilities by integrating data from multiple sensors, such as GPS, IMUs, LiDAR, and cameras. By fusing information about position, orientation, and spatial features, robots can build accurate maps, localize themselves precisely, and navigate autonomously in complex environments.

Object Detection and Tracking:

Data fusion enables robots to detect and track objects in the environment more effectively by integrating data from multiple sensors and sources. By combining information about object appearance, motion, and context, robots can detect objects accurately, track their trajectories, and predict future movements.

Decision-Making:

Data fusion techniques support informed decision-making by providing robots with a comprehensive and reliable understanding of the environment. By integrating data from diverse sensors and modalities, robots can analyze complex scenarios, assess risks, and choose optimal actions based on probabilistic inference and uncertainty reasoning.

Adaptive and Robust Operation:

Data fusion enables robots to adapt to changing environmental conditions and maintain robustness in uncertain or dynamic scenarios. By continuously integrating and updating sensor data, robots can respond to new information, mitigate sensor failures or inconsistencies, and maintain reliable operation in challenging environments.

Advancements and Future Directions:

Recent advancements in data fusion techniques have focused on enhancing fusion algorithms, improving sensor capabilities, and enabling real-time processing and analysis. Emerging trends include the development of deep learning-based fusion models, multi-modal fusion frameworks, and distributed fusion architectures for multi-robot systems.

As robotics technology continues to advance, the integration of advanced data fusion capabilities will play a crucial role in enabling more autonomous, adaptive, and intelligent robotic systems. From autonomous vehicles and drones to industrial and service robots, data fusion techniques will continue to drive innovation and expand what robots can do, improving their perception, decision-making, and autonomy.

- Perception Algorithms:

Perception algorithms play a crucial role in processing sensor data and extracting meaningful information about the environment. These algorithms include computer vision algorithms for object detection and recognition, SLAM (Simultaneous Localization and Mapping) algorithms for mapping and localization, sensor calibration algorithms, and machine learning techniques for pattern recognition and classification.

Perception Algorithms in Robotics: Unraveling the Secrets of Sensory Understanding

Perception algorithms form the backbone of robotic systems, enabling them to interpret the sensory data collected from the environment. These algorithms process visual, auditory, tactile, and other sensor inputs to extract meaningful information, detect objects, recognize patterns, and support informed decisions. Drawing on machine learning, computer vision, and signal processing techniques, perception algorithms allow robots to navigate complex environments, interact with objects, and carry out a wide range of tasks autonomously. The sections below cover their principles, functionalities, and impact on robotic capabilities.

Principles of Perception Algorithms:

Perception algorithms encompass a wide range of techniques and methodologies for processing sensor data and extracting relevant information about the environment. These algorithms operate based on principles such as:

Feature Extraction:

Feature extraction algorithms identify and extract distinctive features or patterns from sensor data, such as edges, corners, textures, or keypoints. These features serve as building blocks for higher-level perception tasks such as object detection, recognition, and tracking.
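Edge extraction is among the simplest feature extraction steps and can be sketched in a few lines. The example below applies the standard Sobel kernels to a grayscale image stored as a list of rows; it uses plain Python for clarity rather than speed, and the function name `sobel_magnitude` and the tiny test image are assumptions for illustration.

```python
import math
from typing import List

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # responds to vertical edges
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]   # responds to horizontal edges


def sobel_magnitude(image: List[List[float]]) -> List[List[float]]:
    """Gradient magnitude per pixel; border pixels are left at zero."""
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for r in range(1, height - 1):
        for c in range(1, width - 1):
            gx = gy = 0.0
            for dr in range(3):
                for dc in range(3):
                    pixel = image[r + dr - 1][c + dc - 1]
                    gx += SOBEL_X[dr][dc] * pixel
                    gy += SOBEL_Y[dr][dc] * pixel
            out[r][c] = math.hypot(gx, gy)
    return out


if __name__ == "__main__":
    # Hypothetical 5x6 image: dark left half, bright right half.
    image = [[0.0, 0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(5)]
    for row in sobel_magnitude(image):
        print(row)
```

High values in the output mark the boundary between the dark and bright regions; such edge responses are typical inputs to later detection and tracking stages.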

Pattern Recognition:

Pattern recognition algorithms analyze sensor data to identify and classify objects or patterns using learned or statistical models. These algorithms leverage machine learning techniques such as supervised learning, unsupervised learning, and deep learning to recognize objects, gestures, facial expressions, or other patterns in sensory inputs.

Spatial and Temporal Processing:

Spatial and temporal processing algorithms analyze the spatial and temporal characteristics of sensor data to detect changes, track motion, and infer spatial relationships. These algorithms enable robots to perceive object motion, depth information, and spatial layouts in the environment.

Probabilistic Inference:

Probabilistic inference algorithms reason about uncertainty and ambiguity in sensor data, incorporating probabilistic models and Bayesian inference techniques to make informed decisions. These algorithms enable robots to assess the likelihood of different hypotheses, estimate uncertainty, and adapt their behavior based on uncertain or incomplete information.
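Assessing the likelihood of competing hypotheses comes down to applying Bayes' rule. The sketch below updates a belief over a discrete set of hypotheses given one sensor reading; the function name `bayes_update`, the door example, and the sensor probabilities are all hypothetical values chosen for illustration.

```python
from typing import Dict


def bayes_update(prior: Dict[str, float], likelihood: Dict[str, float]) -> Dict[str, float]:
    """Posterior over hypotheses given P(reading | hypothesis) for each one."""
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    total = sum(unnormalized.values())
    if total == 0:
        raise ValueError("reading is impossible under every hypothesis")
    return {h: p / total for h, p in unnormalized.items()}


if __name__ == "__main__":
    # Is the door open? Start undecided.
    belief = {"open": 0.5, "closed": 0.5}
    # Assumed sensor model: it reports "open" 60% of the time when the door
    # is open, and 20% of the time when the door is actually closed.
    reads_open = {"open": 0.6, "closed": 0.2}
    belief = bayes_update(belief, reads_open)
    print(belief)
```

Feeding each posterior back in as the next prior lets the belief sharpen as more readings arrive, while contradictory readings pull it back toward uncertainty.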

Sensor Fusion:

Sensor fusion algorithms integrate data from multiple sensors and modalities to create a unified representation of the environment. These algorithms combine information from sensors such as cameras, LiDAR, radar, and IMUs to compensate for individual sensor limitations, improve accuracy, and enhance overall perception capabilities.

Functionalities of Perception Algorithms in Robotics:

Perception algorithms provide robots with critical functionalities that enable them to perceive, interpret, and interact with the environment effectively:

Object Detection and Recognition:

Perception algorithms enable robots to detect and recognize objects in the environment, such as people, vehicles, obstacles, and landmarks. By analyzing visual, auditory, or tactile information, robots can identify objects, classify them into categories, and infer their properties or attributes.

Scene Understanding and Semantic Segmentation:

Perception algorithms analyze sensor data to understand the spatial layout of the environment, segmenting scenes into meaningful regions and identifying objects or structures of interest. Semantic segmentation algorithms assign each pixel of an image, or each voxel of a 3D scan, to a semantic category such as road, building, vegetation, or pedestrian, enabling robots to understand the context of their surroundings.

Localization and Mapping:

Perception algorithms enable robots to localize themselves within the environment and create accurate maps of the surrounding space. Simultaneous Localization and Mapping (SLAM) algorithms integrate sensor data to estimate the robot’s pose and map the environment’s features at the same time, allowing robots to navigate autonomously.

Gesture and Action Recognition:

Perception algorithms analyze sensor data to recognize human gestures, actions, and intentions, facilitating natural and intuitive interaction between robots and humans. By interpreting visual, auditory, or tactile cues, robots can understand gestures, recognize commands, and respond appropriately to human actions or instructions.

Anomaly Detection and Situation Awareness:

Perception algorithms enable robots to detect anomalies and unexpected events in the environment, enhancing situational awareness and safety. By analyzing sensor data for deviations from normal patterns or behaviors, robots can alert users to potential hazards or critical events in real time.

Advancements and Future Directions:

Recent advancements in perception algorithms have focused on improving accuracy, robustness, and efficiency through deep learning and probabilistic modeling techniques. Emerging trends include the development of real-time perception algorithms, lightweight and low-power implementations for edge computing, and multi-modal fusion frameworks for integrating data from diverse sensors and modalities.

As robotics technology continues to advance, the integration of advanced perception algorithms will play a crucial role in enabling more autonomous, adaptive, and intelligent robotic systems. From autonomous vehicles and drones to industrial and service robots, perception algorithms will continue to drive innovation and expand what robots can do, helping them perceive, interpret, and interact with the environment effectively.

- Environmental Modeling:

Robots create internal representations or models of the environment based on sensor data, enabling them to reason about spatial relationships, object properties, and task requirements. Environmental models may include geometric maps, semantic maps, occupancy grids, object models, and probabilistic representations of uncertainty.
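An occupancy grid with log-odds updates is a common way to hold a probabilistic map of free and occupied space. The sketch below is a minimal version under stated assumptions: the class name `OccupancyGrid` and the 0.7 and 0.3 sensor-model probabilities are illustrative, and the step that decides which cells a sensor ray hit or passed through is left out.

```python
import math

# Assumed inverse sensor model: a "hit" suggests 70% occupied,
# a "miss" (ray passed through) suggests 30% occupied.
L_OCC = math.log(0.7 / 0.3)
L_FREE = math.log(0.3 / 0.7)


class OccupancyGrid:
    """Grid map storing the log-odds of occupancy for each cell."""

    def __init__(self, width: int, height: int) -> None:
        # Log-odds of 0 corresponds to probability 0.5 (unknown).
        self.log_odds = [[0.0] * width for _ in range(height)]

    def update(self, row: int, col: int, hit: bool) -> None:
        """Fold one observation of a cell into its log-odds."""
        self.log_odds[row][col] += L_OCC if hit else L_FREE

    def probability(self, row: int, col: int) -> float:
        """Convert the stored log-odds back to an occupancy probability."""
        return 1.0 - 1.0 / (1.0 + math.exp(self.log_odds[row][col]))


if __name__ == "__main__":
    grid = OccupancyGrid(width=4, height=3)
    grid.update(1, 2, hit=True)
    grid.update(1, 2, hit=True)
    print(f"{grid.probability(1, 2):.3f}")
```

Working in log-odds turns each Bayesian update into a single addition, and repeated consistent observations push a cell steadily toward occupied or free.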

- Sensor Feedback Loops:

To interact effectively with the environment, robots utilize sensor feedback loops that continuously monitor and update sensory information. These feedback loops enable robots to adapt their behavior in real time based on changes in the environment, sensor noise, or unexpected events, ensuring robust and reliable operation.
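A feedback loop of this kind can be sketched as a proportional controller that holds a target distance from a wall. The example below is a simplified, noise-free simulation under stated assumptions: the function names `p_control_step` and `simulate`, the gain, and the speed limit are hypothetical, and a real loop would read the distance from a range sensor on every cycle.

```python
def p_control_step(measured: float, target: float, gain: float, max_speed: float) -> float:
    """Speed command proportional to the distance error, clamped to limits."""
    error = measured - target          # positive when still too far away
    command = gain * error
    return max(-max_speed, min(max_speed, command))


def simulate(
    initial_distance: float,
    target: float,
    gain: float = 1.0,
    max_speed: float = 0.5,
    dt: float = 0.1,
    steps: int = 200,
) -> float:
    """Run the sense-decide-act loop and return the final distance."""
    distance = initial_distance
    for _ in range(steps):
        # Sense: here the simulated distance stands in for a sensor reading.
        speed = p_control_step(distance, target, gain, max_speed)
        # Act: driving forward at `speed` reduces the distance to the wall.
        distance -= speed * dt
    return distance


if __name__ == "__main__":
    print(f"{simulate(initial_distance=2.0, target=0.5):.4f} m")
```

With these values the product of gain and time step is well below 1, so the error shrinks smoothly each cycle; a gain that is too high for the loop rate would make the robot overshoot and oscillate.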

Functionalities of Perception and Sensing in Robotics:

Perception and sensing capabilities empower robots with a wide range of functionalities that enhance autonomy, adaptability, and interaction with the environment:

- Obstacle Detection and Avoidance:

Sensors such as LiDAR, radar, and sonar enable robots to detect obstacles in their path and navigate safely in complex environments. By analyzing sensor data in real time, robots can plan collision-free trajectories, steer around static obstacles, and negotiate dynamic ones such as moving vehicles or pedestrians.
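The detection step can be illustrated with a planar range scan. The sketch below finds the closest valid return inside a forward-facing sector, assuming the scan arrives as a list of ranges at evenly spaced angles with 0 rad pointing straight ahead; the function name `nearest_obstacle` and its parameter names are illustrative, not taken from a specific driver.

```python
from typing import List, Optional, Tuple


def nearest_obstacle(
    ranges: List[float],
    angle_min: float,
    angle_increment: float,
    half_fov: float,
    max_range: float,
) -> Optional[Tuple[float, float]]:
    """Closest valid return within +/- half_fov of straight ahead.

    Returns (range, angle) or None when nothing valid is in the sector.
    """
    best: Optional[Tuple[float, float]] = None
    for i, r in enumerate(ranges):
        angle = angle_min + i * angle_increment
        if abs(angle) > half_fov:
            continue  # outside the sector the robot is driving into
        if not (0.0 < r <= max_range):
            continue  # drops zero, negative, infinite, and NaN readings
        if best is None or r < best[0]:
            best = (r, angle)
    return best


if __name__ == "__main__":
    # Hypothetical five-beam scan from -1.0 rad to +1.0 rad.
    scan = [3.0, 0.4, 2.0, 0.8, float("inf")]
    hit = nearest_obstacle(scan, angle_min=-1.0, angle_increment=0.5,
                           half_fov=0.6, max_range=10.0)
    if hit is not None and hit[0] < 0.5:
        print(f"obstacle at {hit[0]} m, bearing {hit[1]} rad: slow down")
```

A planner would typically use the returned range and bearing to choose between stopping, slowing, or steering away from the obstacle.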

- Object Detection and Recognition:

Vision sensors and machine learning algorithms enable robots to detect and recognize objects in the environment, such as people, vehicles, furniture, and tools. Object detection and recognition facilitate various tasks, including object manipulation, grasping, sorting, and inspection in industrial, service, and household environments.

- Localization and Mapping:

SLAM algorithms enable robots to simultaneously localize themselves within an unknown environment and create a map of the environment’s features. By integrating data from sensors such as LiDAR, cameras, and IMUs, robots can build accurate maps, localize themselves relative to the map, and navigate autonomously without external infrastructure.

- Human-Robot Interaction:

Perception and sensing enable robots to interact intelligently with humans by understanding human gestures, expressions, and intentions. Vision sensors and depth cameras, combined with algorithms for face, gesture, and expression recognition, allow robots to recognize human faces, gestures, and emotions, facilitating natural and intuitive communication in applications such as social robotics, healthcare, and customer service.

- Environmental Monitoring and Surveillance:

Sensors such as cameras, LiDAR, and environmental sensors enable robots to monitor their surroundings for purposes such as security and environmental monitoring. By continuously scanning the environment and analyzing sensor data, robots can detect anomalies, identify safety hazards, and provide real-time situational awareness in critical scenarios.

Advancements and Future Directions:

Recent advancements in perception and sensing technologies have focused on improving sensor performance, refining algorithms, and enabling robust integration with robotic systems. Emerging trends include the development of lightweight and low-power sensors, high-resolution imaging systems, intelligent sensor fusion techniques, and advanced machine learning algorithms for perception and decision-making.

As robotics technology continues to advance, the integration of advanced perception and sensing capabilities will play a crucial role in enabling more autonomous, adaptive, and intelligent robotic systems. From autonomous vehicles and drones to industrial and service robots, perception and sensing technologies will continue to drive innovation and expand what robots can do. With them, robots can navigate complex environments, interact seamlessly with humans, and carry out a wide range of tasks with precision and efficiency.