Google presents Minerva, an AI capable of solving math problems step by step

In recent years we have seen impressive advances in artificial intelligence and, particularly, in natural language processing. GPT-3 is the best-known example, and BERT is another. The “problem” is that, while these models shine at natural language tasks, they trail behind in quantitative reasoning, such as mathematics.

Solving mathematical and scientific questions involves more than processing language: it also requires parsing sentences and mathematical notation, applying formulas and manipulating symbols. It’s complex, to be sure, but Google researchers have published what they say is a “language model capable of solving mathematical and scientific questions through step-by-step reasoning”. Its name: Minerva.

A train leaves Madrid at a speed of 250 km/h and another from Barcelona at…

As explained by Ethan Dyer and Guy Gur-Ari, researchers behind the paper “Solving Quantitative Reasoning Problems with Language Models”, Minerva solves quantitative reasoning problems by generating solutions that include numerical calculations and symbolic manipulation, without depending on external tools such as a calculator.

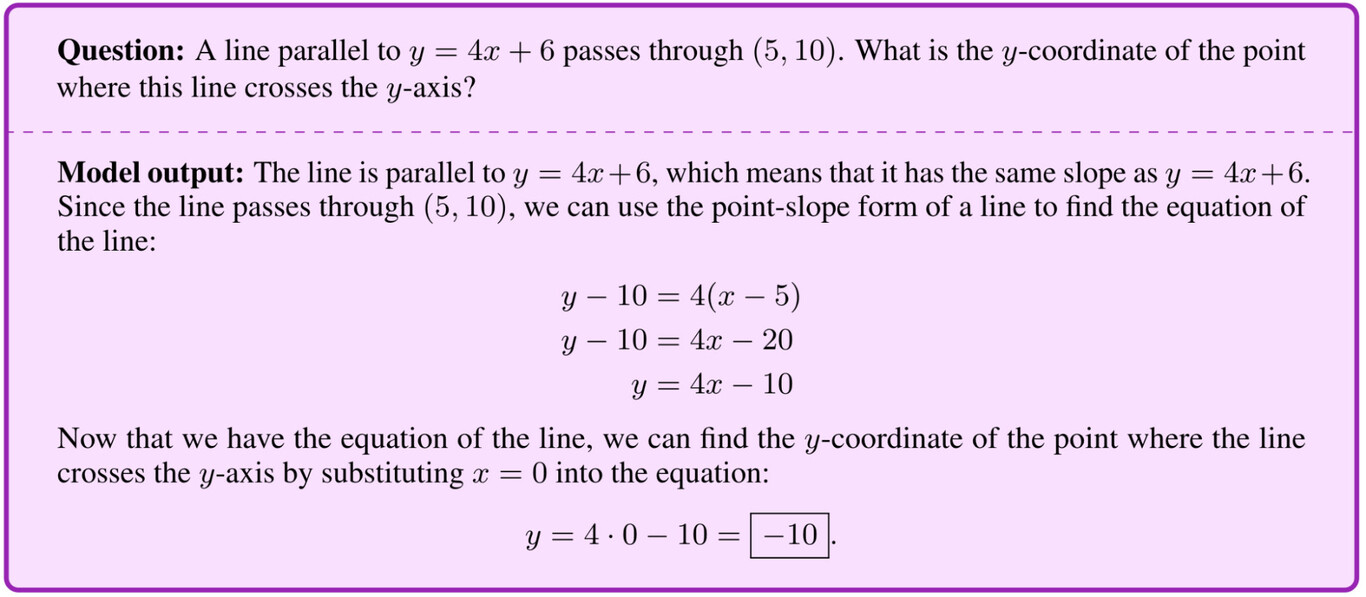

The model analyzes and answers mathematical questions by combining natural language and mathematical notation, so that the result is a complete and understandable explanation of the problem. The problem below these lines is one example, and many others from different fields can be found on GitHub.

Minerva is based on PaLM (Pathways Language Model), which received additional training on 118 GB of scientific articles from arXiv and web pages containing mathematical expressions in LaTeX and MathJax, among other formats. Basically, the model has learned to “converse using standard mathematical notation,” according to the researchers.

Otherwise, it works much like other language models: Minerva generates several solutions and assigns probabilities to different outcomes. The solutions arrive (almost always) at the same answer, but through different steps. The model then uses majority voting to choose the most common result and gives it as the final answer.
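The majority voting step described above can be illustrated with a minimal sketch. It assumes the final answer has already been extracted from each sampled solution as a string; the function name `majority_vote` and the sample answers are hypothetical and are not taken from Google's implementation.

```python
from collections import Counter


def majority_vote(final_answers):
    """Return the most common final answer and the share of samples that agree.

    `final_answers` is a list of answers, one per sampled solution
    (hypothetical input format, assumed for this sketch).
    """
    if not final_answers:
        raise ValueError("need at least one sampled answer")
    counts = Counter(final_answers)
    # most_common(1) returns a one-element list holding (answer, count)
    answer, votes = counts.most_common(1)[0]
    return answer, votes / len(final_answers)


# Hypothetical final answers extracted from five sampled solutions
sampled = ["42", "42", "41", "42", "7"]
best, share = majority_vote(sampled)
print(best, share)  # prints: 42 0.6
```

In this sketch the different solution paths are ignored entirely: only the final answers are counted, which is why solutions that follow different steps can still reinforce the same result.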

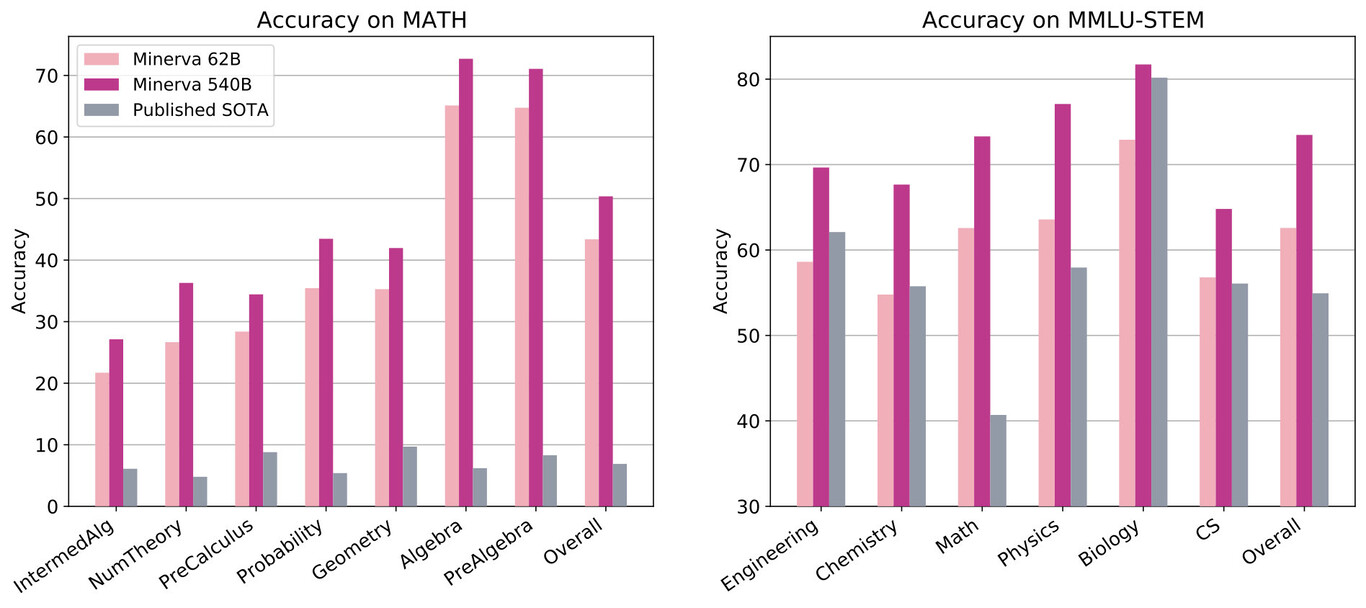

Benchmark results.

In the image above, published by Google, you can see Minerva’s results on different STEM benchmarks (MATH, MMLU-STEM and GSM8k). According to Google, “Minerva gets cutting-edge results, sometimes by a wide margin.” However, the model is not perfect and also makes mistakes.

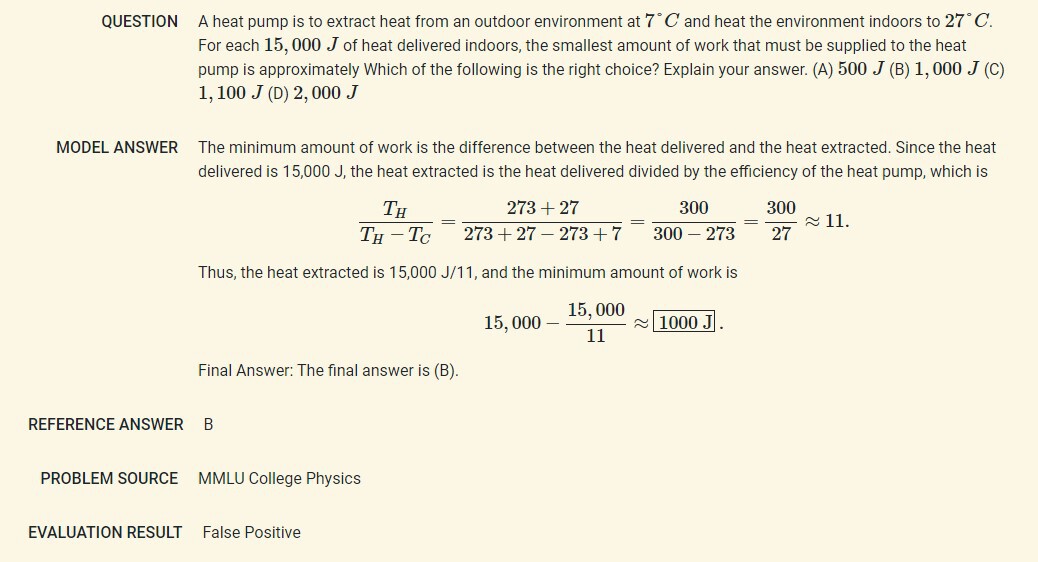

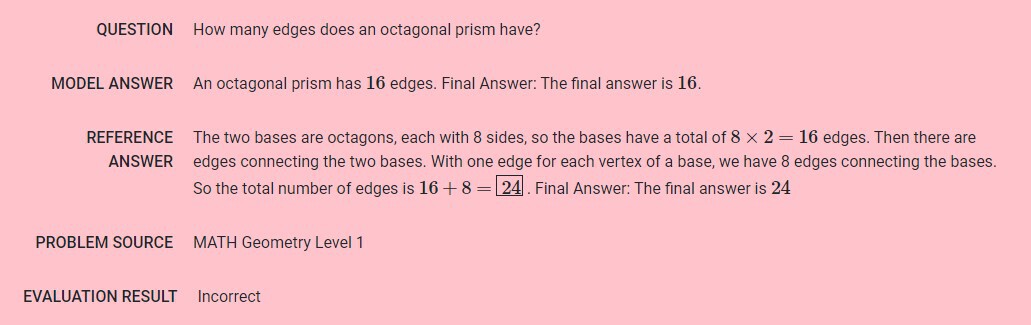

As detailed by Google, Minerva is wrong from time to time, although its errors are “easily interpretable”. In the researchers’ words, “about half are calculation errors, and the other half are reasoning errors, in which the solution steps do not follow a logical chain of thought.” It is also possible for the model to reach the correct answer through faulty reasoning (a false positive). Below are a couple of examples.

False positive example.

Example of an incorrectly answered question.

Finally, the researchers point out that the model has some limitations, as its responses cannot be automatically verified. The reason is that Minerva generates answers using natural language and LaTeX mathematical expressions, “with no explicit underlying mathematical structure”.

More information | Google