Yesterday Apple introduced iOS 16, which brings improvements to Live Text, the feature that uses on-device intelligence to recognize text in images and now in videos as well.

I have been testing the new version of iOS since yesterday, and I decided to search for “dog” in the Photos app because, as far as I remember, I don’t have any photos of dogs.

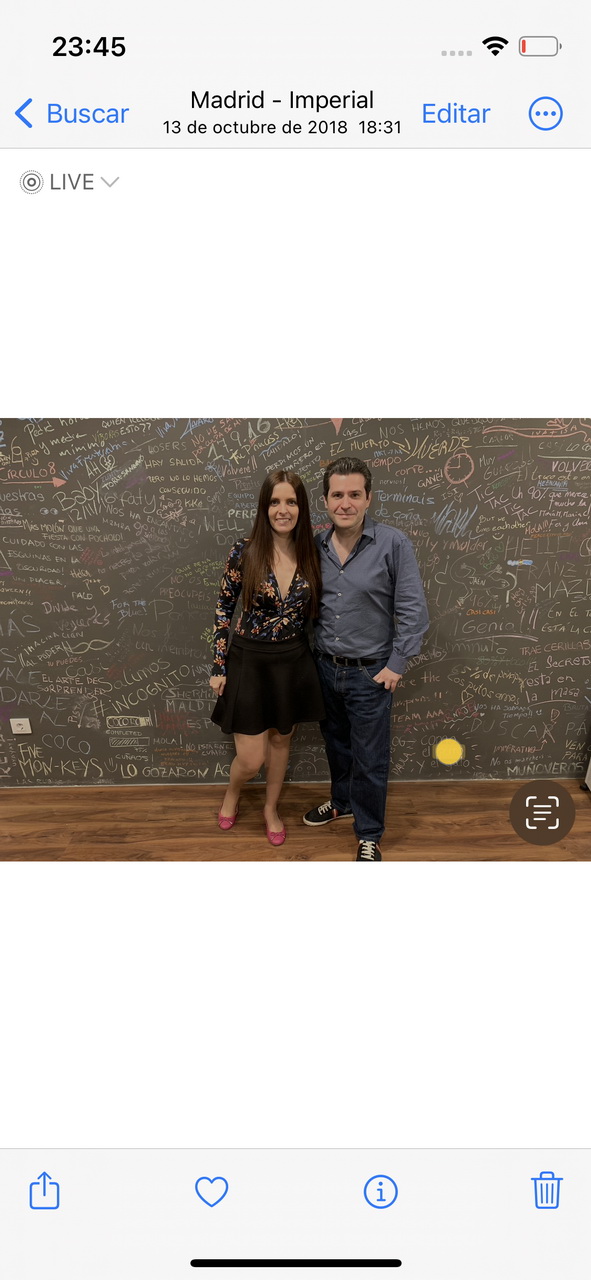

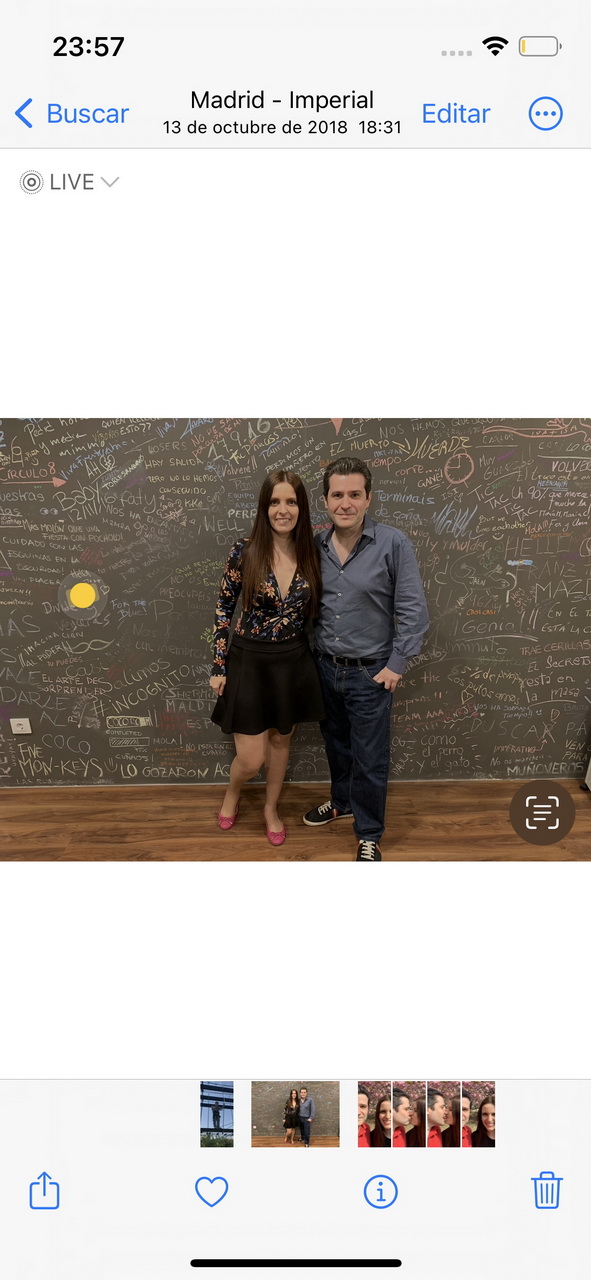

To my surprise, a photo appeared in the results in which, of course, there is no dog, only some people posing in front of a blackboard covered in notes left by others who had passed by.

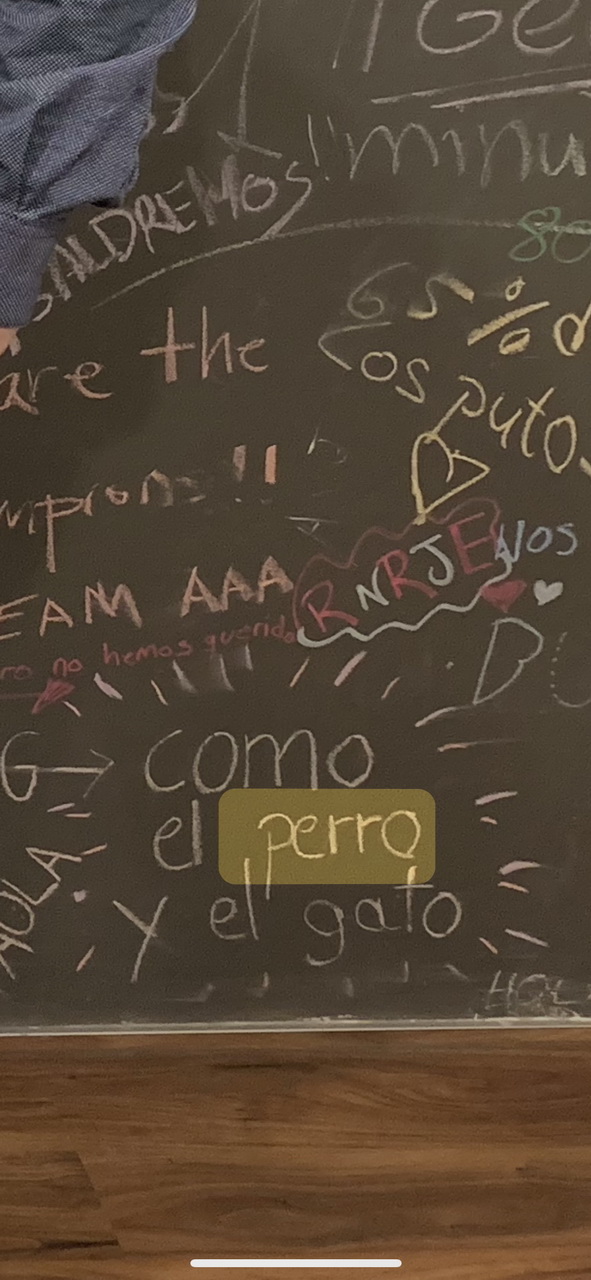

The curious thing is that, if you look closely, the word “dog” is in fact written in one area of the blackboard (luckily, the app marks the spot with a yellow dot).

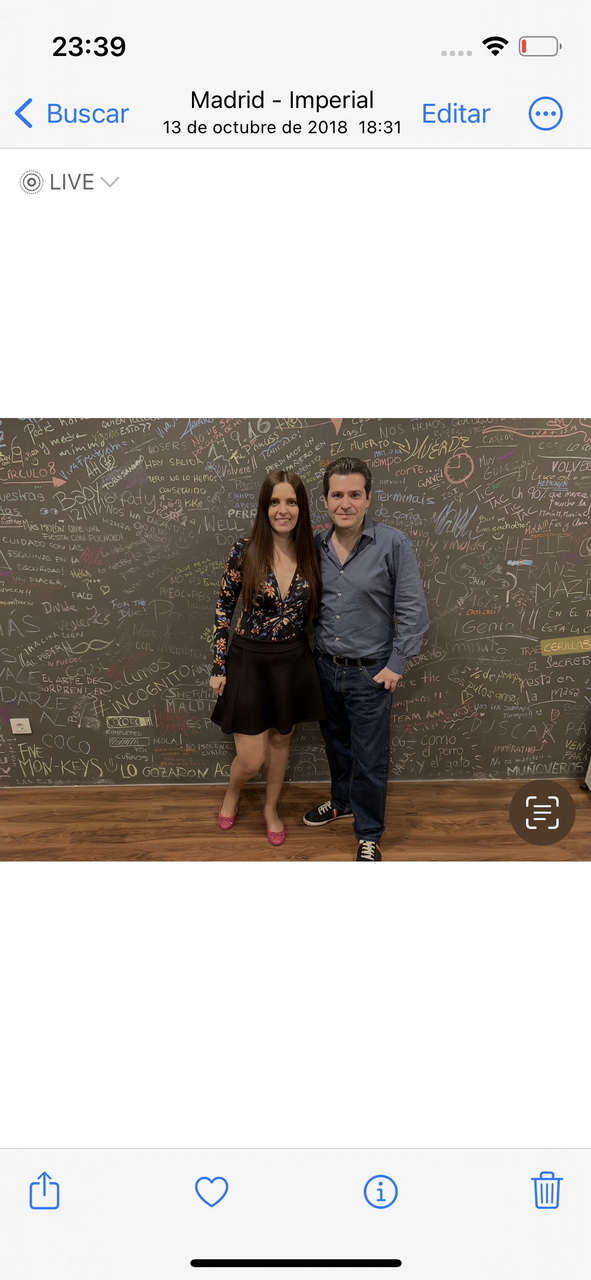

Next, I searched for “matches” and, again, the app marked the spot where that word is written. Even more impressive, it also found the word “stick”, which is barely legible.

Dog

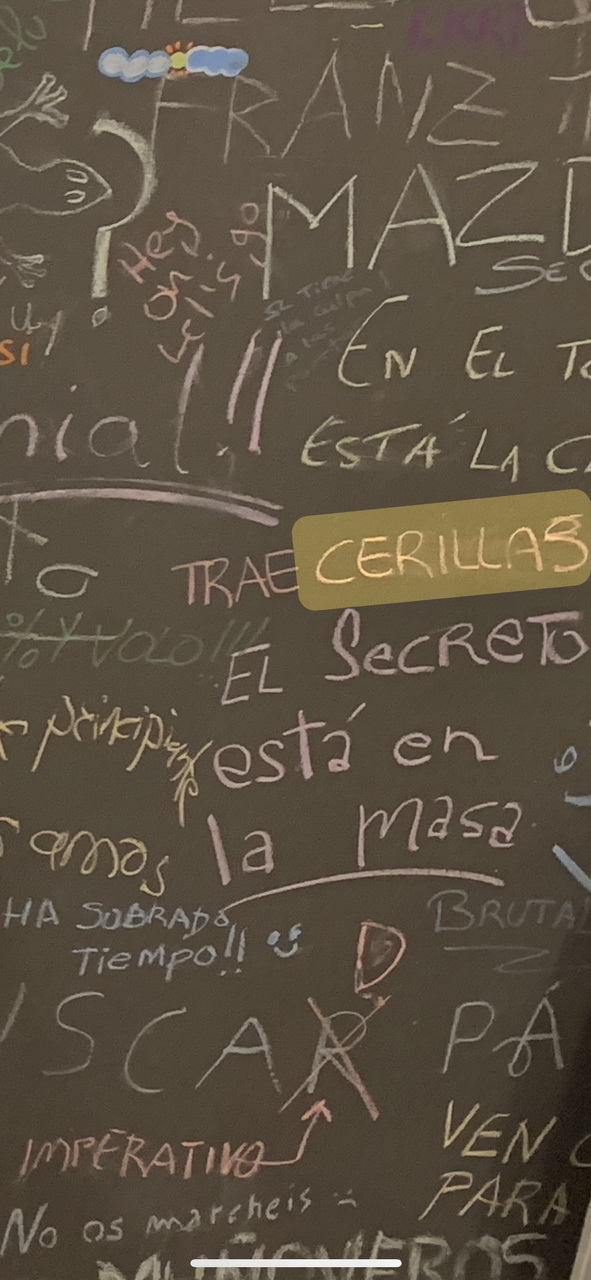

Matches

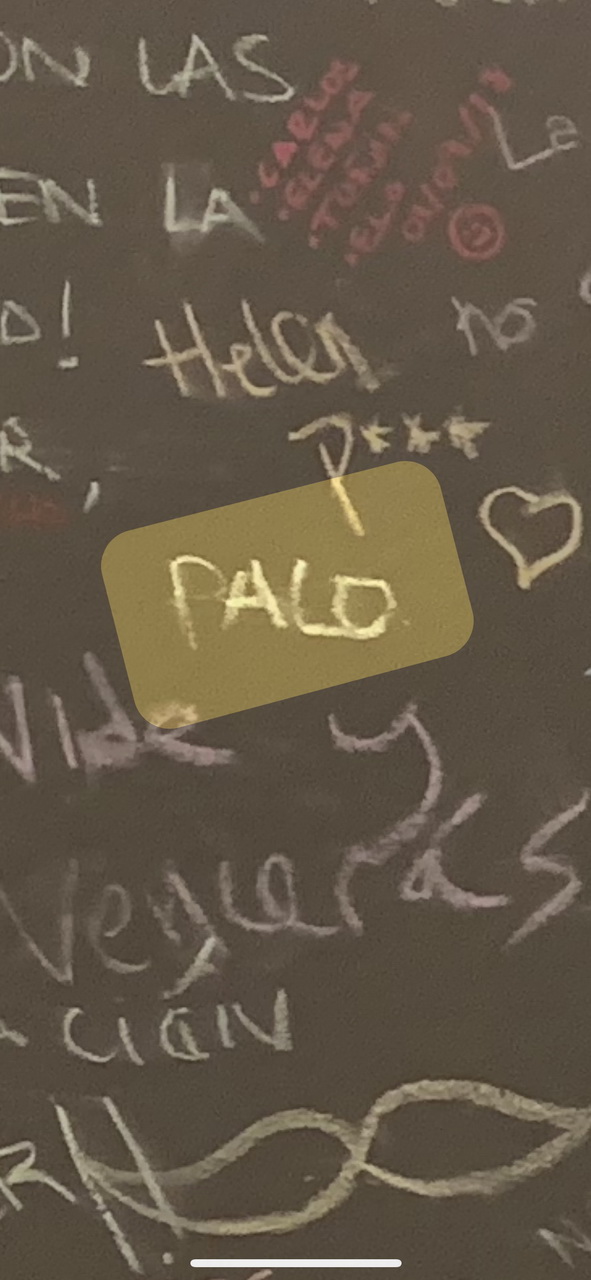

Stick

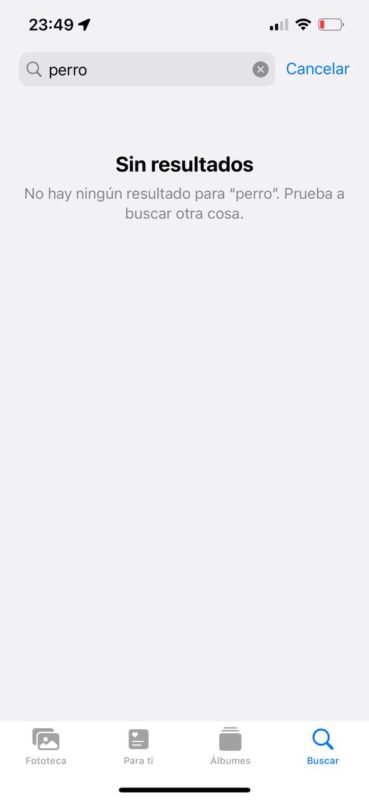

I tried the same search on another iPhone running iOS 15, but got no results.

iOS 15 can’t find any dogs

Judging by these searches, Apple has significantly improved Live Text in this new version, and its capabilities now recall those of Google Lens, which has long stood out for its ability to recognize text in images.