Google has just announced some new additions to AI Mode, its AI-enhanced Search experience, designed to support students ahead of the return to school.

Many of these features are already familiar, either because they are borrowed from Gemini (even though Big G has said it is not worried about overlap with its assistant) or because they were already rolling out to users before the announcement. Let's look at the details.

Index:

- AI Mode gets new features

- Attach PDFs and images

- Canvas arrives

- Video sharing in Search Live is (now officially) rolling out

- Search what you see while browsing

AI Mode gets new features

It is not yet available in Italy, but AI Mode continues to grow, both in reach (it recently became available in India and the United Kingdom, in addition to the United States) and in capabilities.

The latest additions are brand new, just announced by Google in a dedicated post on its blog, The Keyword. The features described below are designed mainly for students, parents and teachers, but they can be useful to anyone doing research. For now, availability is limited to AI Mode on desktop.

Attach PDFs and images

AI Mode in desktop browsers is about to gain the same support for uploading images and PDF files that is already available in the Google app: users will be able to ask detailed questions about the files they provide to the search assistant. Support for files from Google Drive will arrive in the future.

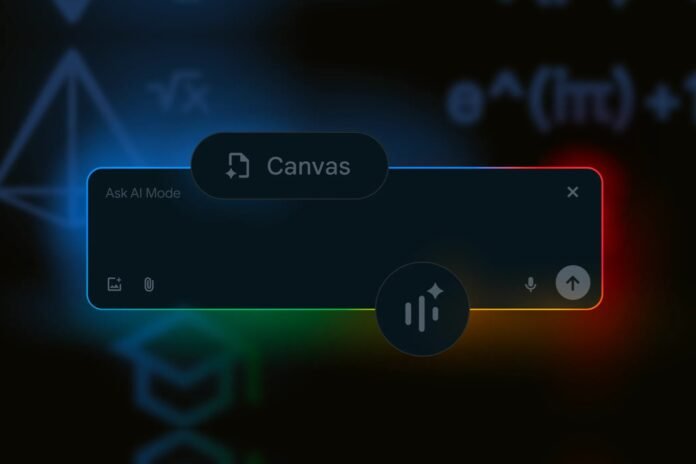

Canvas arrives

To help users stay organized and keep track of information from different sources, Google has brought Gemini's Canvas feature to AI Mode as well.

Canvas lets you build action plans and organize information in a dynamic side panel that updates as the research or project progresses. The output can then be refined until it meets the user's needs.

Support for file uploads is coming soon, letting users refine their work with the context of their own files. Projects can be resumed and edited later.

Video sharing in Search Live is (now officially) rolling out

We reported on it only a few hours ago, and now the official announcement has arrived too: live video sharing from the camera is rolling out in AI Mode's Search Live feature, letting users ask the search assistant questions about whatever they frame with their smartphone camera.

This capability, deeply integrated with Google Lens, is currently available only to users in the United States who have enrolled in the experiment through Search Labs.

https://www.youtube.com/watch?v=onwkhkvrvne

Search what you see while browsing

The last piece of news of the day involves the version of Lens built into the Chrome browser and AI Mode. Thanks to the interplay between these features, users can ask about, and learn more about, the content shown on the browser screen.

The feature will be accessible via the new Ask Google about this page button, once again very similar to what Gemini does when we invoke its overlay on an Android smartphone.

Clicking this option brings up an AI-generated overview that highlights the key information in the browser's side panel. From the top of the results, or via the "Dive deeper" button, you can open AI Mode to explore aspects of the on-screen content further.